Cao, Chenjie, Xinlin Ren, and Yanwei Fu. "MVSFormer: Multi-view stereo by learning robust image features and temperature-based depth." arXiv preprint arXiv:2208.02541 (2022).

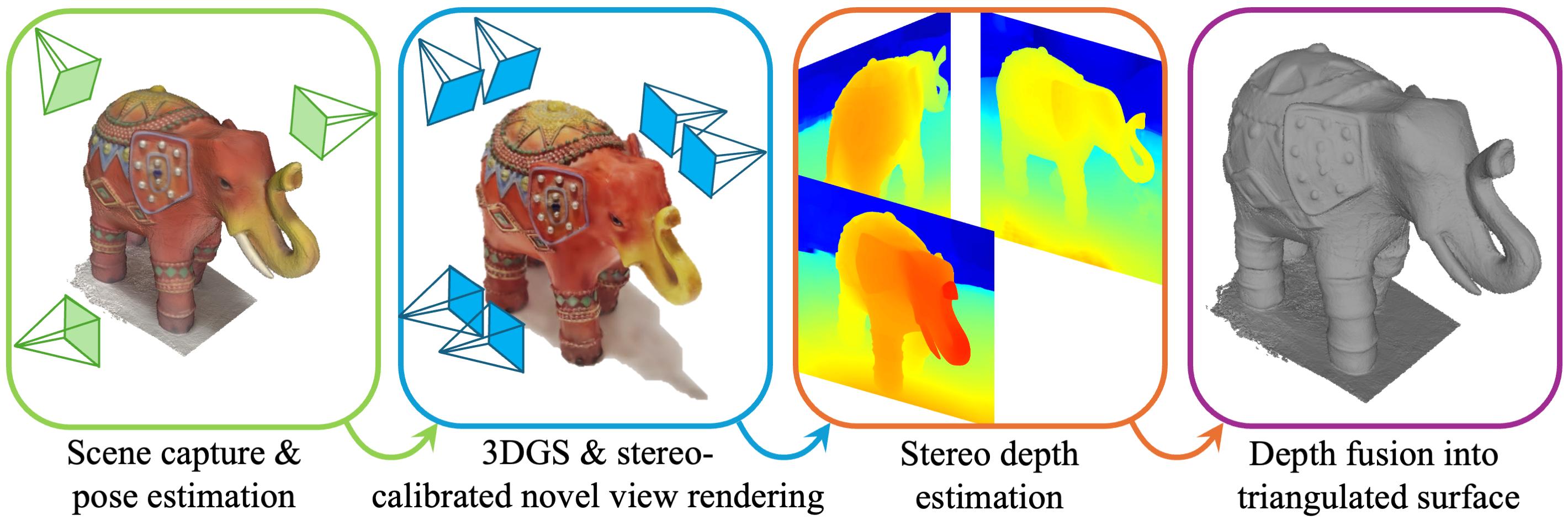

Huang, Binbin, et al. "2D Gaussian splatting for geometrically accurate radiance fields." SIGGRAPH 2024 Conference Papers. Association for Computing Machinery, 2024. doi:10.1145/3641519.3657428.

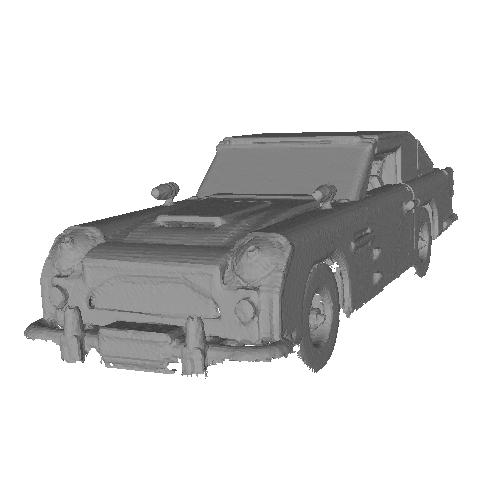

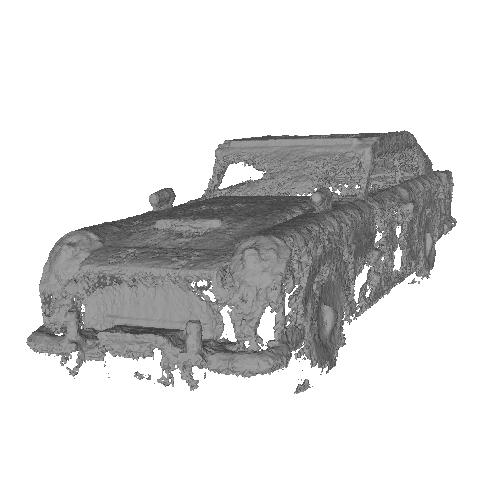

Jensen, Rasmus, et al. "Large scale multi-view stereopsis evaluation." 2014 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2014: 406-413.

Kerbl, Bernhard, et al. "3D Gaussian splatting for real-time radiance field rendering." ACM Transactions on Graphics 42.4 (2023): 1-14.

Knapitsch, Arno, et al. "Tanks and Temples: Benchmarking large-scale scene reconstruction." ACM Transactions on Graphics (ToG) 36.4 (2017): 1-13.

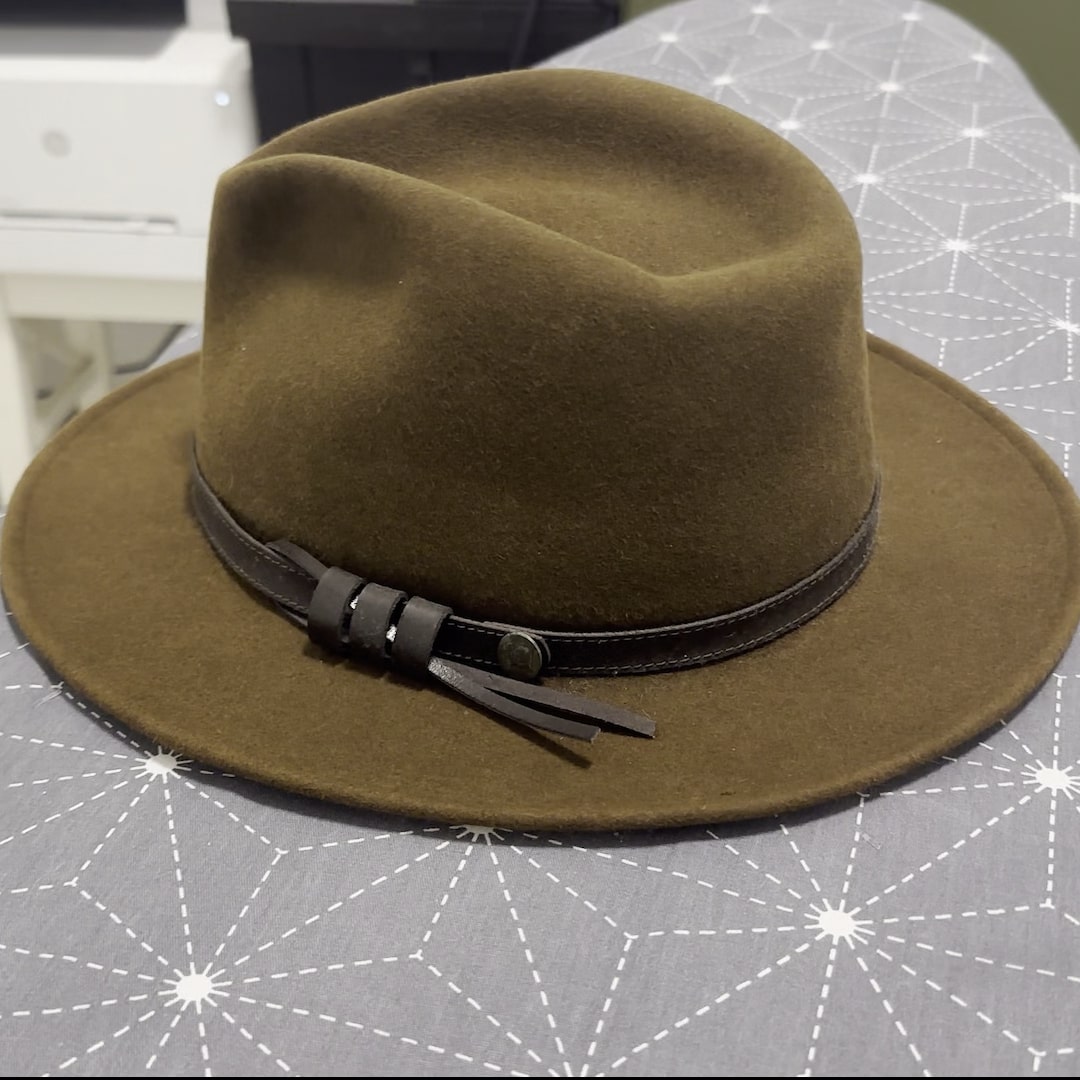

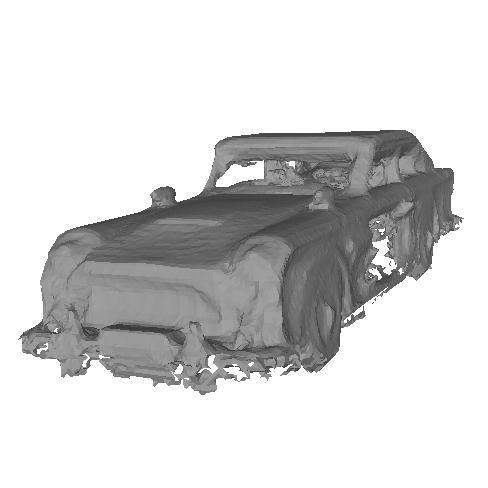

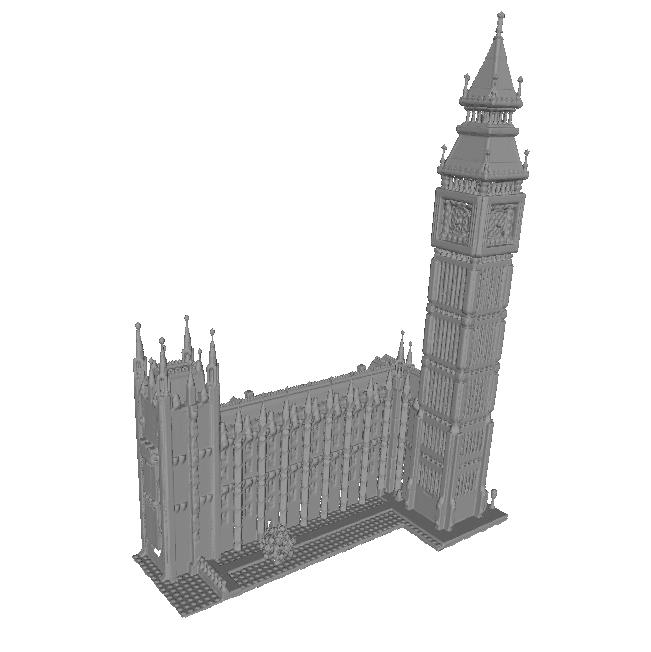

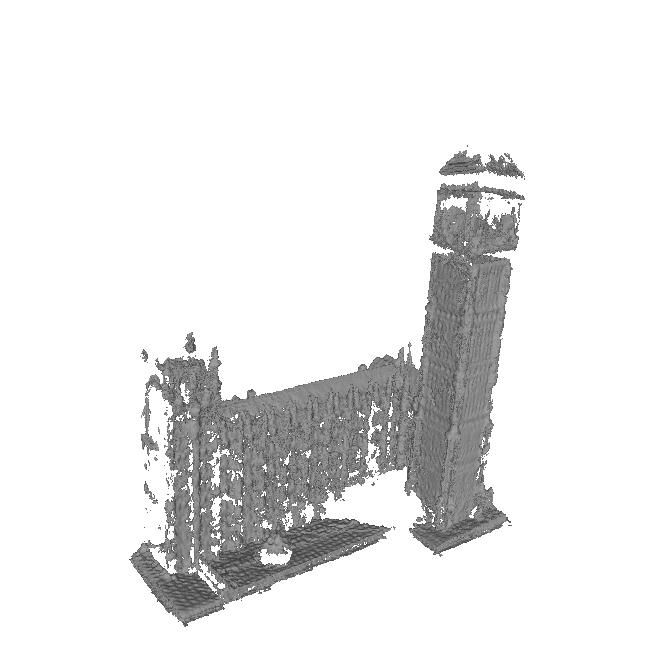

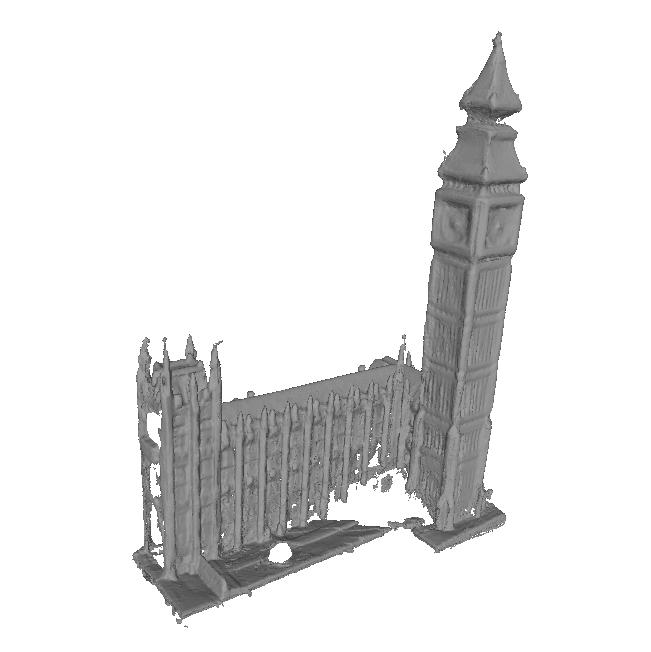

Li, Kejie, et al. "MobileBrick: Building LEGO for 3D reconstruction on mobile devices." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023.